Has anyone had experience using AWS Lambda with Eventstore?

We were playing with the idea of making some of our system serverless, but my concern is the connection to the Eventstore (a shared connection which is always established).

While I don’t have personal experience, I have talked to people doing something similar. Here are the key takeaways:

- Forget about running ESDB subscriptions on a Lambda

- Instead, your command handling lives in a Lambda, which then sticks the position of the write result into something like SNS fanout + SQS

- Your projection, living in a Lambda, then reads the position off its queue and compares it against the last position it stored. If seen position > stored position, it reads from the stored position to the end (skipping the first event, as reading is inclusive WRT position) and applies the events to the read model
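The last bullet could be sketched roughly like this. It is only a minimal sketch, not tied to any real client: `read_all_from`, `apply_event` and the in-memory `stored_position` are hypothetical stand-ins for an ESDB read, the read model update and wherever the projection persists its checkpoint. The position is also simplified to a single integer.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass(frozen=True)
class RecordedEvent:
    position: int  # simplified: one integer instead of a real log position
    type: str
    data: dict


class Projection:
    """Catches the read model up when a queue message reports a newer position."""

    def __init__(
        self,
        read_all_from: Callable[[Optional[int]], Iterable[RecordedEvent]],
        apply_event: Callable[[RecordedEvent], None],
        stored_position: Optional[int] = None,
    ) -> None:
        # Hypothetical: reads from the given position (inclusive) to the end.
        self._read_all_from = read_all_from
        # Hypothetical: updates the read model for one event.
        self._apply_event = apply_event
        self.stored_position = stored_position

    def on_position_seen(self, seen_position: int) -> int:
        """Handle one queue message; returns how many events were applied."""
        if self.stored_position is not None and seen_position <= self.stored_position:
            return 0  # already caught up (duplicate or out-of-order message)

        applied = 0
        for event in self._read_all_from(self.stored_position):
            # The read is inclusive, so skip what was already processed.
            if self.stored_position is not None and event.position <= self.stored_position:
                continue
            self._apply_event(event)
            self.stored_position = event.position  # checkpoint after each event
            applied += 1
        return applied
```

Because the handler always reads to the end, a late or duplicated queue message simply finds nothing new and returns without touching the read model.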

So where does the write to ES actually happen?

From my perspective - you should write the event in the Lambda and then publish the position of the write result to SNS or SQS (or Kinesis, Kafka, whatever queue you prefer). Then another Lambda (or set of Lambdas - I suggest a Lambda per projection) will be triggered by the queue, and you can apply the logic that @Joao_Braganca described in the last bullet point.
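As a rough illustration of the write side, here is a minimal sketch in Python. The two injected functions are assumptions, not real client calls: `append_to_stream` stands in for the Eventstore append and returns the position of the write result, and `publish` stands in for sending a message to SNS/SQS. The `order-` stream name and `OrderPlaced` event type are made up for the example.

```python
import json
from typing import Callable


def make_handler(
    append_to_stream: Callable[[str, dict], int],
    publish: Callable[[str], None],
):
    """Builds a Lambda-style handler from two injected (hypothetical) functions.

    append_to_stream(stream, event) -> position of the write result
    publish(message_body)           -> sends the message to the queue/topic
    """

    def handler(event: dict, context: object = None) -> dict:
        command = json.loads(event["body"])
        stream = f"order-{command['orderId']}"
        position = append_to_stream(stream, {"type": "OrderPlaced", "data": command})
        # Publish only the position; projections read the events from the store.
        publish(json.dumps({"position": position}))
        return {"statusCode": 202, "body": json.dumps({"position": position})}

    return handler
```

Injecting the two functions keeps the handler testable without a running store or queue.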

Oskar,

When you say “write the event in the Lambda” I assume you mean create a connection to the Eventstore, and AppendToStream?

@steven.blair - yes, exactly. If you have a concern about the time needed to open the connection, you may try to memoize it. See, e.g. https://technology.amis.nl/2019/10/31/state-management-in-serverless-functions-connection-pooling-in-aws-lambda-leveraging-memoized-functions/.
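A minimal sketch of the memoization idea in Python, assuming the client is cheap to reuse and safe to keep between invocations. `FakeClient` is a stand-in for a real Eventstore client and the connection string is only a placeholder. It relies on Lambda reusing the same execution environment for warm invocations, so module-level state survives between calls.

```python
from functools import lru_cache


class FakeClient:
    """Stand-in for a real Eventstore client; counts how often it is built."""

    created = 0

    def __init__(self, connection_string: str) -> None:
        FakeClient.created += 1
        self.connection_string = connection_string


@lru_cache(maxsize=None)
def get_client(connection_string: str) -> FakeClient:
    # Runs once per distinct connection string per execution environment;
    # later (warm) invocations get the cached instance back.
    return FakeClient(connection_string)


def handler(event: dict, context: object = None) -> dict:
    client = get_client("esdb://localhost:2113?tls=false")  # placeholder value
    return {"client_id": id(client)}
```

A cold start still pays the connection cost once; the cache only helps the invocations that follow in the same environment.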

Thanks for the help guys.

So, I would look at replacing my ES JavaScript projections and subscriptions with a Function that is fed from SQS (the published event?)

Yes, that’s what I’d suggest.

You can also take a mixed approach and run the subscription client as a container on EC2 or as a Fargate task. It could subscribe and handle the projections itself, or be just a thin pass-through that subscribes and pushes the events to SNS or SQS. Using SNS has the benefit that you could push all the events there and do the routing to the SQS queues that will trigger your Lambdas with projections.

I also saw that AWS recently added Docker support for lambda - that can probably open some options.

Ok, we are going to have a crack at building a prototype using AWS Lambda and see how we get on.

We rely heavily on projections for building enriched events, so it’s hard to see how we could replace that at the moment.

@steven.blair then maybe you could start by making the write model serverless and keep the subscriptions at EC2 or Fargate? That would give you an option to take this step by step.

Could you elaborate more on the “building enriched events” part? Does it mean that you’re doing the event transformations?

Oskar,

Yeah, this approach sounds like a good starting point for us (writing serverless and a permanent subscription).

As for the event transformations, we have projections which subscribe to various categories and, using a partition, hold some useful state from various different events.

We then emit a nice chunky event that makes updating the read model much less of a chore.

It’s a really important part of our system, and not something I want to change.

There might be other technologies out there that could be used to get the same result (we had a quick look at Kafka but it was Linux only).

We found our read model was having to do the heavy lifting when an event arrived, whereas with this approach it’s pretty slick and needs minimal code.

Ok, understood. Thank you for the explanation. I agree that putting the queue as the man in the middle will cut off a lot of the benefits of using subscriptions.

If you have a constant load, then EC2 would be even cheaper than AWS Lambda. If not, you can consider Fargate Tasks, as they’re a middle ground between Lambda and EC2. You could publish events to SQS to trigger the Fargate Tasks and start processing the subscription. With that, you could cut some costs if, e.g. the majority of traffic happens during the day, but not so much at night.

Keep us posted on your progress

For our proof of concept we have settled on:

- AWS Lambda for writing to a self hosted Eventstore (EC2)

- Self hosted Eventstore continues to run projections

- Self hosted service for persistent subscriptions which will call AWS Lambda for writing to our RDS*

*Do you think there would be any value in writing the enriched events to SQS, then using SQS - Lambda, or is this just another component for the sake of it?

I see two places where SQS (or other queues) may help:

- trigger Fargate Task (with subscriptions) after storing an event,

- decoupling the RDS writing from the subscription/projections. You could benefit from the built-in retries, DLQ etc. If you add SNS, then you could also get notifications (if, e.g. something went wrong), etc.
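To illustrate the retries/DLQ point, here is a minimal sketch of an SQS-triggered Lambda in Python. `write_to_read_model` is a hypothetical stand-in for the RDS write. The sketch assumes partial batch responses (`ReportBatchItemFailures`) are enabled on the event source mapping, so only the failed messages are retried rather than the whole batch.

```python
import json
from typing import Callable


def make_sqs_handler(write_to_read_model: Callable[[dict], None]):
    """Builds an SQS-triggered Lambda handler around a hypothetical RDS writer."""

    def handler(event: dict, context: object = None) -> dict:
        failures = []
        for record in event["Records"]:
            try:
                write_to_read_model(json.loads(record["body"]))
            except Exception:
                # Report only this message as failed; SQS redelivers it and moves
                # it to the DLQ once the queue's maxReceiveCount is exceeded.
                failures.append({"itemIdentifier": record["messageId"]})
        return {"batchItemFailures": failures}

    return handler
```

If the order of enriched events matters for the read model, processing would need to stop at the first failure instead of continuing with the rest of the batch.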

I think that you can start without them and add them later if needed. However, I think that it would be good to consider and at least do some PoC to verify what’s the better approach for you.

Btw, the gRPC client doesn’t really have an always-open connection; it is stateless for writes. The only parts that we can consider “stateful” are continuous reads and subscriptions.

When it comes to EC2, I don’t remember the last time I had to deal with VMs in production. We moved to containers a while ago and it solved most of the deployment and scaling issues.

That’s interesting Alexey.

Our perceived connection sharing problem could be resolved if we started using gRPC.

Currently, we are still running 5.0.8 using the tcp client.

This is a really useful thread. Thanks!

I’ve recently joined a company for a greenfield project and I see event sourcing as something that could bring a lot of value to the domain we’re dealing with.

I’ve used Event Store DB in the past with a good result (in VM and as a container). I haven’t used AWS though, and I’m pretty new to serverless but AWS + serverless lambda is the priority and I’d like to try out Cloud EventStore DB in AWS also and the “new” gRPC client for C#.

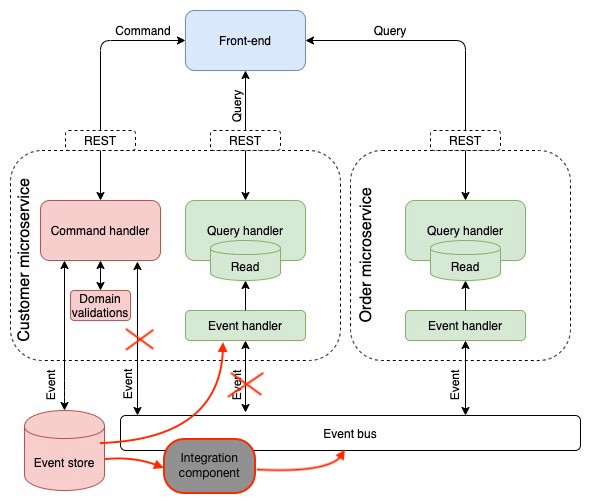

I’m used to apps with a REST API and command handlers that retrieve the event stream, instantiate an event-sourced aggregate with all the domain logic, and finally append the new events to the EventStoreDb stream, sending back a result as an HTTP response and moving on. As for the read models and projections, I’ve used catch-up subscriptions to EventStoreDb to write the projections to another NoSQL DB and/or to map the domain events to some kind of integration events that are published on a message bus for cross-bounded-context requirements. Pretty standard as per Alexey Zimarev’s nice post

But this serverless Lambda approach still makes me uncomfortable, especially when listening to some people saying that there should be a lambda per message type (really? why?). I can’t see why that level of granularity would help, especially when the domain logic lives in an aggregate that relies on several different events to build up its current state.

Sorry if I state the obvious. I am still getting my head around all these new serverless and AWS concepts.

My preferred option for a single bounded context is to have one Lambda function (project?) that takes ANY command from the API Gateway, acts as the command handler and has all the aggregate code. I hope this is normal. It should be pretty quick at handling the command, delegating to the aggregate to validate or reject the transaction, and writing events to EventStoreDb. Am I correct in saying that it’d be weird to have one lambda per command type?
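A minimal sketch of that "one Lambda per bounded context" shape in Python, just to make the idea concrete. Everything here is hypothetical: the account commands and events are invented, and the `STREAMS` dictionary stands in for reading from and appending to EventStoreDb.

```python
import json
from typing import Callable, Dict, List

# Hypothetical in-memory event store keyed by stream name.
STREAMS: Dict[str, List[dict]] = {}


def open_account(history: List[dict], cmd: dict) -> List[dict]:
    if history:
        raise ValueError("account already exists")
    return [{"type": "AccountOpened", "owner": cmd["owner"]}]


def deposit(history: List[dict], cmd: dict) -> List[dict]:
    if not history:
        raise ValueError("account does not exist")
    return [{"type": "MoneyDeposited", "amount": cmd["amount"]}]


# One function, many command types: routing is a dictionary lookup.
COMMANDS: Dict[str, Callable[[List[dict], dict], List[dict]]] = {
    "OpenAccount": open_account,
    "Deposit": deposit,
}


def handler(event: dict, context: object = None) -> dict:
    cmd = json.loads(event["body"])
    decide = COMMANDS.get(cmd["type"])
    if decide is None:
        return {"statusCode": 400, "body": "unknown command"}
    stream = f"account-{cmd['accountId']}"
    history = STREAMS.get(stream, [])       # load the aggregate's events
    try:
        new_events = decide(history, cmd)   # domain logic accepts or rejects
    except ValueError as err:
        return {"statusCode": 409, "body": str(err)}
    STREAMS[stream] = history + new_events  # append the resulting events
    return {"statusCode": 200, "body": json.dumps(new_events)}
```

All command types share the same load, decide and append steps, which is one argument for grouping them in a single function rather than splitting per command.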

Then as some of you have suggested, it makes sense to have some other process (EC2?) that subscribes to the EventStoreDb, does whatever mapping and projections, or even interacts with some web socket to notify of any result to the UI, and publishes events in a message bus.

For that I’m thinking of using AmazonMQ or maybe SNS/SQS with MassTransit. I’ve used NServiceBus and MassTransit in the past and I really like MassTransit. Haven’t considered Kafka or Kinesis and at this stage probably I’d go with message bus technology since the order is not vital and the map between domain events and integration events should take care of sorting out some things. Please feel free to recommend me ANYTHING.

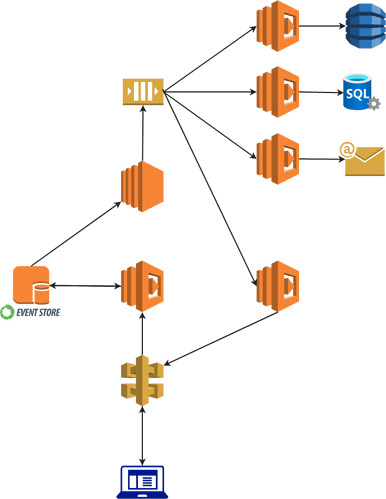

Later on, I’d have some more Lambdas to subscribe to these “integration events” for various purposes, reporting, UI notification, emails, process managers or whatever.

Is this more or less similar to what you guys were talking about? Does anybody have a link, an article or any advice on this? It’s difficult to find AWS + serverless + EventSourcing info out there, other than the AWS “recommended” approach where there is always some Kinesis in between

PS: Something like this?

Am I correct in saying that it’d be weird to have one lambda per command type?

Yes, I find that weird as well. Some kind of grouping is always necessary, imho.

For the general setup: the Lambdas accepting the commands and emitting events to the store can then just send a SomeNewEventAppeared { some interesting data } message through SNS/SQS, pushed to any downstream Lambdas, which connect to the store, do their work and then go to sleep again.

Basically, you don’t have to send the actual events, just some trigger to wake up downstream consumers.