Good day. When initializing a volume that will contain only an EventStore database, is there a cluster size that would be better or worse from a performance standpoint? This would be on a Windows Server 2012 R2 Azure instance, using a volume created with write caching disabled and the normal 256MB chunks.

-Thanks

From discussions with others, the cluster size did not matter much and the defaults seemed reasonable; I would have to actually benchmark it to give you more detail. One thing that was important, IIRC, was enabling read caching on the local disk (warmed-up reads then came from the local disk rather than from blob storage). Btw, using blob storage as the underlying store should help with some other things, as data is written three times when using blob storage.

Can you let us know what throughput you are looking for? I believe Rinat was fairly easily able to get up to about 6000 messages/second, but if you need to go much higher we might need to do a bit of research on tuning for this particular scenario.

Cheers,

Greg

Thanks Greg. I set the cluster size to 8192 bytes, since the files are all the same size and, as you indicated, it may not matter much given the nature of the access. I changed the storage caching to “read”, as I had previously set it to none. The performance was subpar. Judging from I/O performance monitoring, I am not sure the disks are co-located with the servers; they look to be “not near” electrically. The caching may help.
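Because every chunk file has the same size, cluster size mainly affects allocation granularity. As a minimal sketch, the function below (a hypothetical helper) computes how many clusters a file occupies and how much slack is left in its last cluster. It assumes "256MB" means 256 × 2^20 bytes and that a chunk file is exactly that size; if real chunk files carry a small header or footer, at most one cluster per file is partially used.

```python
def cluster_usage(file_size: int, cluster_size: int) -> tuple[int, int]:
    """Return (clusters allocated, slack bytes in the last cluster) for one file."""
    clusters = -(-file_size // cluster_size)  # ceiling division without floats
    slack = clusters * cluster_size - file_size
    return clusters, slack


CHUNK_BYTES = 256 * 1024 * 1024  # assumption: a "256MB" chunk is 256 MiB

for cluster in (4096, 8192, 65536):
    clusters, slack = cluster_usage(CHUNK_BYTES, cluster)
    print(f"{cluster:>6}-byte clusters: {clusters} per chunk, {slack} bytes slack")
```

With 8192-byte clusters, a 256 MiB file occupies exactly 32768 clusters with no slack, so the choice has little effect on space usage; any performance difference would need to be benchmarked.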

As for throughput, I do not have a way to measure what we are seeing on our locally managed systems. We are now modifying our deployment architecture to move away from the single-server design, putting ES and the read model on machines separate from the front-end (IIS) application. We are still seeing enormous memory consumption on the ES machine with DBverify set to “true.” Now that the machine is separated from the IIS machine, this consumption may be less of an issue, if it is indeed the nature of the product to consume that much (unmanaged) memory. In our testing on a single machine, the memory is not released to let the IIS worker processes expand, and they crash as a result. This happens on machines with less than 16GB of RAM and similarly sized EventStore databases. Separating the services across VMs will let us relax the resource requirements on the IIS front end significantly.

In my present test scenario with 57 chunks (256MB each, equating to about 5 million events), the Dataserver (running GES and MongoDb) consumed every ounce of RAM (14GB in this case) during startup. After a successful start (which took quite some time), starting MongoDb seemed to take back some RAM for itself, but only a little (< 256MB). The two seemed to coexist, each running on its own disk volume. During normal runtime after startup, the machine showed 180MB of free memory (out of 14GB). The EventStore.SingleNode service itself was consuming almost 500MB of memory at full load.
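A quick size check helps put the startup behaviour in context: if DB verification reads every chunk through the OS file cache, the data touched is larger than the machine's RAM. This sketch assumes MB/GB mean MiB/GiB; the 5 million event count is approximate, so the bytes-per-event figure is only a rough average. The function name is hypothetical.

```python
GIB = 1024 ** 3
CHUNK_BYTES = 256 * 1024 ** 2  # assumption: "256MB" = 256 MiB


def footprint(chunks: int, events: int, ram_gib: float):
    """Return (total bytes, total GiB, larger than RAM?, average bytes per event)."""
    total = chunks * CHUNK_BYTES
    return total, total / GIB, total > ram_gib * GIB, total // events


total, gib, exceeds_ram, per_event = footprint(57, 5_000_000, 14)
print(f"{gib:.2f} GiB on disk, larger than RAM: {exceeds_ram}, ~{per_event} bytes/event")
```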

I would really like to understand whether this memory consumption is “normal” and what will happen over time as the ES grows to 10M, 20M, … events.
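For a rough sense of on-disk growth, one can scale linearly from the observed 57 chunks per ~5 million events, assuming future events are similar in size to the current ones. This is a sketch that projects disk footprint only; it says nothing about memory use, which depends on the caching behaviour. The helper name is hypothetical.

```python
CHUNK_MIB = 256  # assumption: "256MB" = 256 MiB


def projected_chunks(events: int, ref_events: int = 5_000_000, ref_chunks: int = 57) -> int:
    """Scale the observed chunks-per-event ratio linearly; round up to whole chunks."""
    return -(-events * ref_chunks // ref_events)


for target in (10_000_000, 20_000_000):
    n = projected_chunks(target)
    print(f"{target:,} events -> ~{n} chunks, ~{n * CHUNK_MIB / 1024:.1f} GiB")
```

Under these assumptions, 10M events come to about 114 chunks (~28.5 GiB) and 20M to about 228 chunks (~57 GiB).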

Thanks,

Rick

This does not happen in 3.0, as we decided to bypass the OS cache during DB verification, but the behaviour is also completely configurable within Windows. What you are seeing is data being loaded into the Windows file cache. You can tell the file cache how much memory it should use; by default I believe it will use all of your memory (releasing it as other things need it). The behaviour of the Windows file cache is configurable; see fsutil from the Windows command line: http://technet.microsoft.com/en-us/library/cc785435.aspx
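The idea behind bypassing the cache on verification is that each chunk is read once, so keeping it in the file cache buys nothing. Event Store 3.0 does this by bypassing the OS cache (the thread later mentions direct I/O); the sketch below swaps in a different, Linux/POSIX-only technique with the same intent: hashing a file block by block and calling `os.posix_fadvise(..., POSIX_FADV_DONTNEED)` after each block. MD5, the 1 MiB block size and the function name are assumptions for illustration, not Event Store's implementation.

```python
import hashlib
import os

BLOCK_SIZE = 1 << 20  # assumption: read 1 MiB at a time


def chunk_digest(path: str) -> str:
    """MD5 of a file, hinting the kernel to evict each block after hashing it.

    On platforms without posix_fadvise (e.g. Windows) this is an ordinary buffered read.
    """
    h = hashlib.md5()
    fd = os.open(path, os.O_RDONLY | getattr(os, "O_BINARY", 0))
    try:
        offset = 0
        while True:
            block = os.read(fd, BLOCK_SIZE)
            if not block:
                break
            h.update(block)
            if hasattr(os, "posix_fadvise"):
                # DONTNEED is only advice: clean cached pages for this range may be dropped.
                os.posix_fadvise(fd, offset, len(block), os.POSIX_FADV_DONTNEED)
            offset += len(block)
    finally:
        os.close(fd)
    return h.hexdigest()
```

Because the advice is issued per block, the cache footprint of a verification pass stays roughly bounded by the block size instead of growing with the database.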

As for the 500MB used by the process itself, that is also configurable. By default we cache the last 2 chunks in the process's unmanaged memory (you can change this with -c 1, -c 0, etc. on the command line); 2 chunks equate to roughly 500MB. Usually, on a system that has been running for a while, our actual memory usage is closer to 150MB assuming no caching. I would recommend running with -c 2 or -c 1, because in most environments many reads hit the data you wrote most recently.
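To make the trade-off concrete, here is a toy model of the behaviour described above: the newest N chunks are held in process memory (N playing the role of `-c`), so reads near the end of the log are served from memory and everything else goes to disk or the OS cache. The class and method names are hypothetical; this is not Event Store code. With 256MB chunks, N = 2 comes to 512MB, in line with the roughly 500MB figure.

```python
from collections import deque

CHUNK_MIB = 256  # default chunk size discussed in this thread


class TailChunkCache:
    """Toy model of caching the newest N chunks (hypothetical, not Event Store code)."""

    def __init__(self, cached_chunks: int):
        self.cached_chunks = cached_chunks
        self.recent = deque(maxlen=cached_chunks)  # oldest chunk falls off automatically
        self.hits = 0
        self.misses = 0

    def chunk_added(self, chunk_no: int) -> None:
        self.recent.append(chunk_no)

    def read(self, chunk_no: int) -> bool:
        """True if served from process memory, False if it must go to disk/OS cache."""
        if chunk_no in self.recent:
            self.hits += 1
            return True
        self.misses += 1
        return False

    def memory_mib(self) -> int:
        return self.cached_chunks * CHUNK_MIB


cache = TailChunkCache(2)  # roughly "-c 2"
for n in range(57):
    cache.chunk_added(n)
print(cache.memory_mib())                   # 512
print([cache.read(n) for n in (56, 55, 3)])  # [True, True, False]
```

Setting N to 0 removes this memory entirely at the cost of sending every read, including reads of the newest data, to disk.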

Thanks. I will try that. I am going to put 3.0 on this test system and try some of the configurations. In our present environments I will look at adjusting the file cache to reach a happy medium that lets other processes consume memory as needed.

Let us know whether 3.0 also shows the behaviour, just so we can be sure it's not doing it.

Cheers,

Greg

Yes, of course. Thanks.

Btw, as a side note, remember that you will have to reboot the server to clear the cache (it won’t retroactively uncache things that were previously cached :))

Right…

Greg,

I set up EventStore.SingleNode 3.0.0 RC2 in my test environment:

- Windows Server 2012 R2 (2 cores, 14GB RAM)
- C: OS/app
- D: Temp storage
- E: EventStoreDb (database, write cache disabled)
- F: MongoDb (database)
- Binaries running from C:
- Configs on C:

Configurations are set to defaults for the most part.

    <instance name="Test"
        dbPath="E:\SecureData\App_Data\EventStoreDb\Test"
        filePath="…\ThirdParty\EventStore\EventStore.SingleNode.exe"
        tcpPort="1113"
        httpPort="2113"
        runProjections="false"
        skipDbVerify="false"
        cachedChunkCount="0" />
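The `<instance>` element appears to come from your own service-host configuration rather than from Event Store itself, so the mapping below is an assumption: it reads the element with Python's standard `xml.etree.ElementTree`, treats `cachedChunkCount` as the equivalent of the `-c` option Greg described, and reports the resulting chunk-cache budget. `filePath` is left out because its path is elided in the post; the `summarize` helper is hypothetical.

```python
import xml.etree.ElementTree as ET

CHUNK_MIB = 256  # default chunk size

# The posted <instance> element with straight quotes (filePath omitted: elided in the post).
CONFIG = r'''<instance name="Test"
    dbPath="E:\SecureData\App_Data\EventStoreDb\Test"
    tcpPort="1113"
    httpPort="2113"
    runProjections="false"
    skipDbVerify="false"
    cachedChunkCount="0" />'''


def summarize(xml_text: str) -> dict:
    inst = ET.fromstring(xml_text)
    cached = int(inst.get("cachedChunkCount", "0"))
    return {
        "name": inst.get("name"),
        "db_path": inst.get("dbPath"),
        "verify_db_on_start": inst.get("skipDbVerify", "false").lower() != "true",
        "cached_chunks": cached,
        # assumption: cachedChunkCount behaves like the -c command-line option
        "chunk_cache_mib": cached * CHUNK_MIB,
    }


print(summarize(CONFIG))
```

With `cachedChunkCount="0"` and `skipDbVerify="false"`, this configuration verifies the database on start and reserves no chunk cache, so any large memory use at startup would have to come from elsewhere, such as the OS file cache.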

Looking at the FSUTIL documentation you linked for Windows Server, the memory-caching parameters do not let you decrease or cap the amount of paged or non-paged memory the file system uses.

From the link (it looks as though you can only increase the amount of paged and non-paged memory usage):

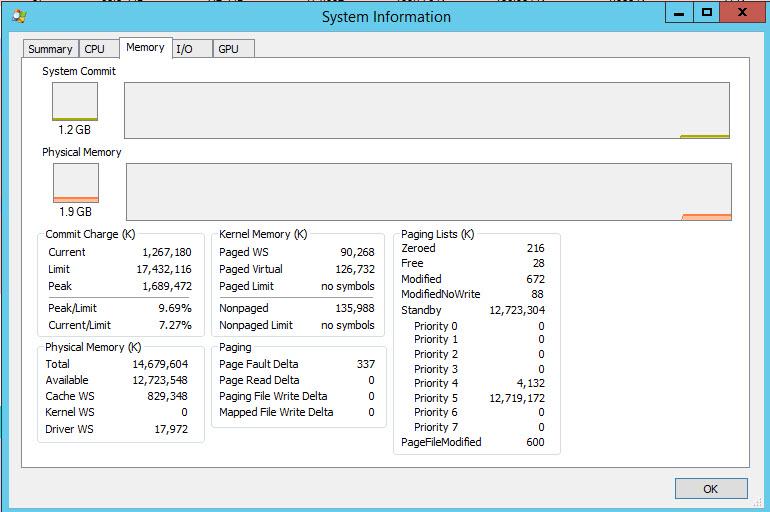

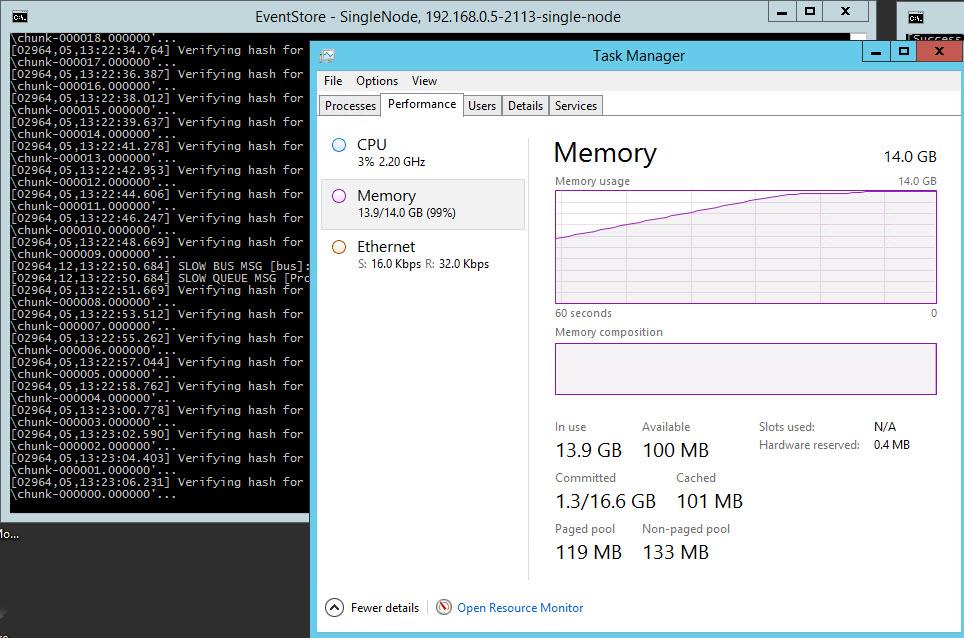

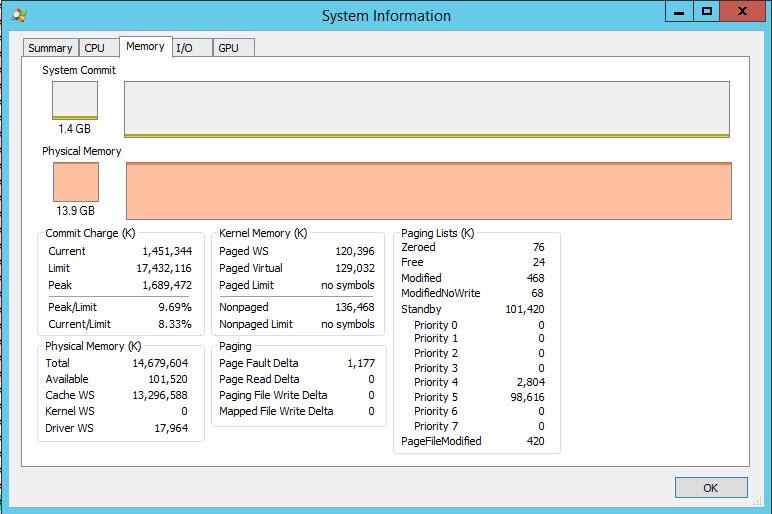

The test showed all memory on the server being consumed at ES startup (screenshot attached).

Starting MongoDb alongside it afterwards showed only a slight fluctuation in usage.

Memory consumption is at 99%.

EventStore.SingleNode commit is 155MB.

As each chunk was accessed at ES startup, the available “physical” memory decreased.

The Sysinfo screen is attached as well.

These are the current observations.

Rick.

@James or @Rich, can we verify this behaviour? I only have Linux here. This should NOT be happening, as we use direct I/O when validating chunks.

Cheers,

Greg

I’ll investigate. I’m not certain those commits were in RC2, though; I’ll need to check that too.

Ah, OK, that would make sense then.

The DirectIO changes were not in RC2; they went in almost immediately afterwards in response to reports of this.

Rick, can you confirm if this is fixed building from the dev branch?

James

Guys,

I got the files from ‘dev’, built them, and have staged them on my test server.

Results are good. Loaded 57 chunks and the server is using only what it needs.

Will work the system and see how it performs.

Thanks,

Rick