So I’ve got a nice, pretty dashboard that is about to be populated by the projections I’ve created, and now I want to show the live events at the bottom by consuming the stream directly.

I’ve got:

`/streams/github?format=json`

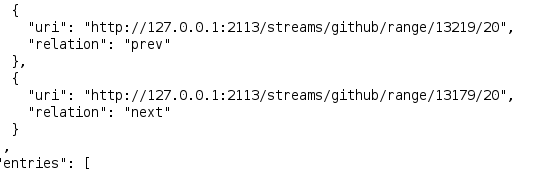

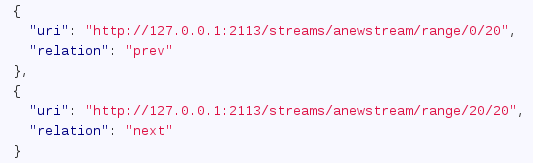

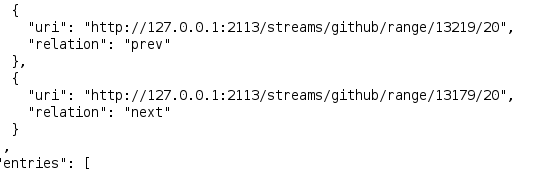

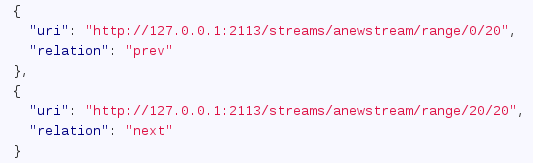

I see we have links to prev/next, although ‘prev’ appears to step forward in time through the stream and ‘next’ appears to step backwards in time, which is the opposite of what the docs say.

My links

Documentation links

I assume at some point this behaviour changed

Regardless, I don’t think I should use the links if I’m consuming this stream from my app. Instead I should poll regularly from the ‘last seen id’ and step forward through the stream until I receive fewer events than I asked for. Correct?
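A minimal sketch of that polling loop, in JavaScript to match the projection code in this thread. It assumes a hypothetical async helper `readPage(from, count)` that returns up to `count` events in stream order starting at event number `from`, and that each event carries a sequential `number` standing in for the ‘last seen id’. Neither is taken from the Event Store API; wire `readPage` to whatever HTTP reads you actually use.

```javascript
// ASSUMPTIONS: readPage(from, count) is a hypothetical async helper returning up to
// `count` events in stream order starting at event number `from`; each event has a
// sequential `number` used as the "last seen id".

// Read forward page by page until a page comes back short (we are at the head).
// Returns the number of the last event handed to onEvent.
async function catchUp(readPage, lastSeen, pageSize, onEvent) {
  let last = lastSeen;
  for (;;) {
    const page = await readPage(last + 1, pageSize);
    for (const event of page) {
      onEvent(event);
      last = event.number;
    }
    // Fewer events than we asked for: nothing more to read right now.
    if (page.length < pageSize) return last;
  }
}

// Repeat the catch-up on a timer; each round resumes where the previous one stopped.
// Returns a function that stops polling.
function startPolling(readPage, onEvent, { pageSize = 20, intervalMs = 1000 } = {}) {
  let lastSeen = -1;
  let timer = null;
  let stopped = false;
  async function round() {
    try {
      // Track progress per event so a failed round does not replay delivered events.
      await catchUp(readPage, lastSeen, pageSize, (event) => {
        onEvent(event);
        lastSeen = event.number;
      });
    } catch (err) {
      console.error('poll failed, will retry:', err);
    }
    // setTimeout (not setInterval) so rounds never overlap.
    if (!stopped) timer = setTimeout(round, intervalMs);
  }
  round();
  return () => {
    stopped = true;
    clearTimeout(timer);
  };
}
```

Chaining `setTimeout` after each round, rather than using `setInterval`, means a slow read never overlaps with the next poll.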

I can even model this as a stream in node.js - fancy that.
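One way to model it as a Node.js stream, sketched under assumptions: an async generator does the forward polling and `Readable.from` (Node 12.3+) turns it into an object-mode readable stream. `readPage(from, count)` is a hypothetical async helper returning up to `count` events starting at event number `from`, each with a sequential `number`; it is not part of any Event Store client.

```javascript
const { Readable } = require('stream');

function sleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// ASSUMPTION: readPage(from, count) is a hypothetical async helper returning up to
// `count` events ({ number, ... }) in stream order starting at event number `from`.
async function* pollEvents(readPage, { from = 0, pageSize = 20, idleMs = 1000, signal } = {}) {
  let next = from;
  while (!(signal && signal.aborted)) {
    const page = await readPage(next, pageSize);
    for (const event of page) {
      next = event.number + 1; // resume point for the next request
      yield event;
    }
    // A short page means we are at the head, so back off before polling again.
    if (page.length < pageSize) await sleep(idleMs);
  }
}

// Wrap the generator as an object-mode Readable that can be piped or listened to.
function eventStream(readPage, options) {
  return Readable.from(pollEvents(readPage, options));
}
```

For example, `eventStream(readPage, { pageSize: 20, signal }).on('data', (event) => { /* update the dashboard */ })`. To stop it, call `abort()` on the `AbortController` that owns `signal`; the loop then exits after its current wait.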

Also, am I going to get put on Santa’s naughty list for doing this?

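```javascript
// Projection: keep the 20 most recent event bodies from the 'github' stream in state
fromStream('github')
  .whenAny(function (s, e) {
    // Skip events that have no body
    if (!e.body) return;

    if (!s.events) s.events = [];
    s.events.push(e.body);

    // Drop the oldest entries so that only the last 20 remain
    while (s.events.length > 20) {
      s.events.shift();
    }
  });
```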

(Or in other words, I assume there is a better way to ask “Get me the 20 most recent ones, please.”)

why not just read the twenty most recent from the stream?
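A minimal sketch of reading the twenty most recent events straight from the stream instead of maintaining them in a projection. It assumes `feed` is the parsed JSON returned by `/streams/github?format=json` and that its `entries` array lists the newest event first; check both against your server version.

```javascript
// Take the N newest entries from the stream's head page, oldest first for display.
// ASSUMPTION: feed.entries is ordered newest first.
function mostRecent(feed, n = 20) {
  const entries = Array.isArray(feed.entries) ? feed.entries : [];
  // slice() copies, so reverse() does not mutate the feed we were given
  return entries.slice(0, n).reverse();
}
```

On Node 18+ (which has a global `fetch`), inside an async function, something like `mostRecent(await (await fetch('http://localhost:2113/streams/github?format=json')).json())` would feed it; the host and port there are assumptions.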

As mentioned above, when I ask for /streams/github I’m not given the most recent events; I can still page forward quite a lot, and I was wondering why.

I was expecting to be able to poll

`/streams/github?format=json`

and just shove the data directly into my real-time visualisation (old entries would naturally just disappear).
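A sketch of that “poll the head and redraw” approach, under assumptions: `getFeed()` is a hypothetical function resolving to the parsed JSON of `/streams/github?format=json`, and `render(entries)` stands in for the dashboard’s redraw. Because each poll returns the current head, older entries drop off without any client-side trimming.

```javascript
// ASSUMPTIONS: getFeed() is a hypothetical async function returning the parsed JSON
// of the stream's head page; render(entries) is the dashboard's (hypothetical) redraw.
// Returns a function that stops polling.
function pollHead(getFeed, render, intervalMs = 2000) {
  let stopped = false;
  let timer = null;
  async function tick() {
    try {
      const feed = await getFeed();
      if (!stopped) render(feed.entries);
    } catch (err) {
      console.error('poll failed:', err); // keep polling through transient errors
    }
    // Schedule the next poll only after this one finishes, so requests never overlap.
    if (!stopped) timer = setTimeout(tick, intervalMs);
  }
  tick();
  return function stop() {
    stopped = true;
    clearTimeout(timer);
  };
}
```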

Let me check why it returns data from the beginning.

that should work that way… you’re receiving the oldest event first?

Okay, I know what is happening: I thought I had stopped the process that was pumping events into the store, but I hadn’t, I had merely backgrounded it.

So by the time I got to look at the stream, there were more events in there

PEBCAK.

herp derp derp

On that note, though: if I am looking at the event stream, I am getting a load of links to those events, whereas my soul-destroying projection gives me an array of the actual events themselves.

What is the Approved Method of getting the stream of actual events themselves rather than links to the events?

Or is that “Just query for the goddamned events and put a goddamned proxy in there because they’re cacheable”?

we will be adding this (we have a card for it), though let me explain why it’s links… it seems inefficient at first, but think about it from an HTTP caching perspective…
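To make the caching argument concrete, here is a small in-process stand-in for what an HTTP cache or proxy would do: once an individual event has been fetched, it never changes, so it can be cached by URI indefinitely. This is a sketch under assumptions: `fetchJson(uri)` is a hypothetical helper that GETs a URI and parses the JSON, and each feed entry is assumed to carry its event’s URI in `entry.id`.

```javascript
// ASSUMPTIONS: fetchJson(uri) is a hypothetical async helper (GET + JSON.parse);
// each feed entry carries the event's URI in entry.id.
function makeEventLoader(fetchJson) {
  // uri -> Promise of the event body. Events are immutable, so entries never go stale.
  const cache = new Map();
  return function loadEvent(uri) {
    if (!cache.has(uri)) {
      // Forget failed requests so a later call can retry them.
      const pending = fetchJson(uri).catch((err) => {
        cache.delete(uri);
        throw err;
      });
      cache.set(uri, pending);
    }
    return cache.get(uri);
  };
}

// Resolve a page of feed entries (links) into the actual event bodies, in order.
function loadEntries(loadEvent, entries) {
  return Promise.all(entries.map((entry) => loadEvent(entry.id)));
}
```

Only events the client has not seen before cost a request; everything already on the dashboard is served from the cache.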

also, you have HTTP keep-alives and pipelining

That’s what I thought: it’s much better than constantly querying a stream that has to build itself out of events, rather than looking at (mostly) cached events.

I do indeed, providing I can find the documentation for doing that sort of thing in node

still has a single connection though
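For the Node side, a sketch using the built-in `http` module: a shared `http.Agent` with `keepAlive: true` reuses the TCP connection across requests for individual event links instead of opening a new one each time. `maxSockets: 1` is an assumption chosen to match the single-connection setup discussed here; raise it for parallel requests. As far as I know, Node’s built-in client reuses keep-alive connections but does not pipeline requests. `fetchJson` is a hypothetical helper name.

```javascript
const http = require('http');

// One shared agent: keep-alive reuses the connection between requests.
// maxSockets: 1 is an assumption mirroring the single-connection discussion.
const agent = new http.Agent({ keepAlive: true, maxSockets: 1 });

// Hypothetical helper: GET a URI over the shared agent and parse the body as JSON.
function fetchJson(uri) {
  return new Promise((resolve, reject) => {
    http
      .get(uri, { agent }, (res) => {
        let body = '';
        res.setEncoding('utf8');
        res.on('data', (chunk) => {
          body += chunk;
        });
        res.on('end', () => {
          if (res.statusCode !== 200) {
            reject(new Error(`GET ${uri} -> ${res.statusCode}`));
            return;
          }
          try {
            resolve(JSON.parse(body));
          } catch (err) {
            reject(err);
          }
        });
      })
      .on('error', reject);
  });
}
```

Call `agent.destroy()` on shutdown so idle keep-alive sockets do not hold the process open.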