Hi,

In our company we are looking at how we could take a production EventStore and produce an anonymised copy of its data in a new EventStore, anonymising only certain fields in certain events (such as the first names, surnames and email addresses of contacts). We have a policy that we cannot use customer data in our test/staging environments, but because the EventStore is immutable we cannot edit the information directly in the copy. Therefore I’m guessing the only way of doing this is to create some process that copies events from the production EventStore to another one, with an anonymisation app in between? Is this what others have done previously?

If not, how have you got around the fact that you shouldn’t use production data in test? My concern is that we want to use feature staging going forward, which means we’d want up-to-date versions of the EventStore prod data each evening, but I’m guessing that an application copying millions of events from a prod EventStore to an anonymised version would be time-consuming.

Any thoughts?

Kind regards,

Mark

You can even do it in real time. Set up a CatchUpAllSubscription on your production cluster, have a small function that anonymizes each event, f(event) -> event, then write the result to the testing cluster. This will keep your testing cluster updated live from the production system. I would also recommend taking a backup occasionally so you can go back to known good starting points.
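A minimal sketch of the f(event) -> event step might look like this. It assumes events are plain dicts with hypothetical `type` and `data` keys and that the PII fields to scrub are configured per event type; the event and field names are illustrative, not from any EventStore API.

```python
import copy
from typing import Any, Dict

Event = Dict[str, Any]

# Hypothetical configuration: which data fields to anonymise in which event types.
PII_FIELDS = {
    "ContactCreated": {"firstName", "surname", "email"},
    "ContactEmailChanged": {"email"},
}


def anonymise(event: Event) -> Event:
    """f(event) -> event: return a copy with the configured PII fields replaced."""
    fields = PII_FIELDS.get(event["type"])
    if not fields:
        return event  # event types without PII pass through untouched
    result = copy.deepcopy(event)  # never mutate the source event
    for name in fields:
        if name in result["data"]:
            # Keep emails syntactically valid so validation in test still passes.
            result["data"][name] = "anon@example.invalid" if name == "email" else "REDACTED"
    return result
```

Every contact ends up with the same placeholder here; if other events or stream names refer to these values, a consistent mapping is needed instead, as discussed further down the thread.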

Cheers,

Greg

Hi Mark!

We are currently deploying staging with an empty EventStore and then starting an import of all events from prod, with a filter transformation in the middle to anonymize data, as you outline. Going forward, however, we will be changing this so that all PII event data is encrypted using a user-specific key (stored as an event itself) and an environment key. When we copy prod data to staging (which has a separate environment key), the PII will simply be unreadable, and we will detect this and replace it in the UI with fake data for testing purposes. This also makes backups safer, as they don't contain any PII. From a legal point of view, it is my understanding that with this scheme in place we don't have any PII "data at rest", only PII "data in flight", as it is only decrypted on read.

I've described this in earlier GDPR-related threads on this list if you want details.

/Rickard
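Rickard's scheme can be sketched roughly as follows. This is a toy, standard-library-only illustration that assumes the per-user key is available as bytes and each environment has its own key. The hand-rolled SHA-256 keystream stands in for a real authenticated cipher (in practice you would use a vetted AEAD such as AES-GCM), and all function names are hypothetical.

```python
import hashlib
import hmac
import os
from typing import Optional


def derive_key(user_key: bytes, env_key: bytes) -> bytes:
    # A different environment key yields an unrelated derived key.
    return hmac.new(env_key, user_key, hashlib.sha256).digest()


def _subkeys(key: bytes):
    # Separate keys for the keystream and for the integrity tag.
    enc = hmac.new(key, b"enc", hashlib.sha256).digest()
    mac = hmac.new(key, b"mac", hashlib.sha256).digest()
    return enc, mac


def _keystream(enc_key: bytes, nonce: bytes, length: int) -> bytes:
    # SHA-256 in counter mode: a TOY stream cipher, for illustration only.
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(enc_key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def encrypt_pii(plaintext: str, key: bytes) -> bytes:
    enc, mac = _subkeys(key)
    nonce = os.urandom(16)
    data = plaintext.encode("utf-8")
    ct = bytes(a ^ b for a, b in zip(data, _keystream(enc, nonce, len(data))))
    tag = hmac.new(mac, nonce + ct, hashlib.sha256).digest()
    return nonce + tag + ct  # 16-byte nonce, 32-byte tag, ciphertext


def decrypt_pii(blob: bytes, key: bytes) -> Optional[str]:
    enc, mac = _subkeys(key)
    nonce, tag, ct = blob[:16], blob[16:48], blob[48:]
    expected = hmac.new(mac, nonce + ct, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        return None  # wrong environment or user key: the PII is unreadable here
    data = bytes(a ^ b for a, b in zip(ct, _keystream(enc, nonce, len(ct))))
    return data.decode("utf-8")


def display_value(blob: bytes, key: bytes, fake: str = "Test User") -> str:
    # UI fallback: substitute fake data when the PII cannot be decrypted.
    value = decrypt_pii(blob, key)
    return value if value is not None else fake
```

The integrity tag is what lets staging detect "unreadable" reliably instead of decoding garbage.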

We have a test environment that gets refreshed with fully anonymised data from live:

- In live we have a process with a subscription to all that anonymises the events in memory and writes them to a second event store in live, which is backed up overnight.

- Then we can restore it into any other environment and do performance/functionality/diagnostic testing.

- Beware of referential integrity for string values (e.g. usernames) that appear both in events and in stream names. This has meant that we compromise and use pseudo-randomisation that is consistent (the same input always produces the same output) and also retains string lengths.
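One way to get consistent, length-preserving pseudo-randomisation is a keyed HMAC, sketched below. This is an assumption about technique, not necessarily what the poster used; the secret must stay out of the test environment, the output is not collision-free for very short values, and the stream-name helper assumes hypothetical `<category>-<id>` names.

```python
import hashlib
import hmac
import string

ALPHABET = string.ascii_lowercase + string.digits


def pseudonymise(value: str, secret: bytes) -> str:
    """Deterministically map value to a random-looking string of the same length."""
    chars = []
    counter = 0
    while len(chars) < len(value):
        block = hmac.new(
            secret, counter.to_bytes(4, "big") + value.encode("utf-8"), hashlib.sha256
        ).digest()
        chars.extend(ALPHABET[b % len(ALPHABET)] for b in block)
        counter += 1
    return "".join(chars[: len(value)])


def pseudonymise_stream(stream: str, secret: bytes) -> str:
    # Assumes "<category>-<id>" stream names: keep the category and map the id
    # with the same function used for event data, so references still line up.
    category, sep, ident = stream.partition("-")
    if not sep:
        return stream  # no id part to map
    return category + sep + pseudonymise(ident, secret)
```

Because the same username maps to the same pseudonym everywhere, an event field `"alice"` and the stream `user-alice` stay linked after anonymisation.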

We used to have a program lying around somewhere that would actually do this for you. Basically it just used a subscribe-to-all, then wrote to whatever node you gave it; to be fair, it was under 100 lines of code. Might this be something useful for us to provide in the future?

An alternative would be to build a new “mode” into EventStore where you could declare that a node is a “follower”. This would do the same thing, but built into the node itself. All writes would be disabled and the replication would be purely asynchronous (but it would work off the normal replication channel as opposed to an outside process). Would this have value for you?

Cheers,

Greg

Hi Greg, that sounds very good. If we had a “staging” EventStore set up to replicate automatically from the “production” EventStore, we’d also want the staging application to be able to write to it.

It would have to “branch” activity, perhaps stop replicating for a while, during staging tests. After staging tests, it would need to revert changes and catch up to the “production” eventstore again.

Thoughts?

One way to achieve this might be to have it do the “reset” when it starts. So a developer can hit “restart” and the staging eventstore automatically resets and catches up to production.

How big is the production data?

In my case, it’s less than 10 million events at present, growing by 10 million per year.

At such small sizes I would just use the catch-up subscription (I know you think 10 GB is big :P, but it fits on a micro-SD card, so it's not big data).

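Use SubscribeToAllFrom(…) with a handler along these lines:

```csharp
// Called for each event delivered by the catch-up subscription.
// Anonymize and otherdb.WriteEvent are placeholders for your own code.
public void SubscriptionMethod(Event e)
{
    var newe = Anonymize(e);  // do whatever you want to transform
    otherdb.WriteEvent(newe); // write the transformed event to the other store
}
```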

It is not the fastest method but is quite simple and flexible. It should get you going by lunch time tomorrow.
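To show the shape of that loop without depending on a particular client library, here is a minimal sketch that models the $all stream as an in-memory list whose index stands in for the log position. `CatchUpCopier` is hypothetical; a real catch-up subscription would also persist the checkpoint so the copier can resume after a restart.

```python
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]


class CatchUpCopier:
    """Toy catch-up copier: read from a checkpoint, transform, write."""

    def __init__(
        self,
        source: List[Event],
        write: Callable[[Event], None],
        transform: Callable[[Event], Event],
    ) -> None:
        self.source = source        # append-only log standing in for $all
        self.write = write          # writes to the target store
        self.transform = transform  # e.g. an anonymiser
        self.checkpoint = 0         # position of the next event to copy

    def catch_up(self) -> int:
        """Copy every event after the checkpoint; return how many were copied."""
        copied = 0
        while self.checkpoint < len(self.source):
            self.write(self.transform(self.source[self.checkpoint]))
            self.checkpoint += 1    # advance only after a successful write
            copied += 1
        return copied
```

Calling `catch_up()` again later copies only events appended since the last call, which is what keeps the nightly refresh cheap.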

A requirement of the “thought exercise” we’re doing here is that staging apps must be able to write to the staging event store, which branches its data from the data of the production event store.

At some point, triggered by developers most likely, the staging event store needs to drop the branched data and re-synchronize with the production eventstore.

I take it your suggestion would require completely erasing the staging eventstore data, and starting it again from the very beginning before running the custom data transfer utility, also from the beginning of time?

Yes. At 10 GB of data, we could do this in a small amount of time compared to the total time developers would be spending on the problem that requires such a process.

You will run into a problem writing to a staging event store while synchronizing.

Stream foo (production):

- FooOccured
- BarOccured

Stream foo (staging):

- FooOccured
- BarOccured
- SomeOtherEventYouAreTesting

When BazOccured gets written to production, copying it to staging will fail if you write with an expected version: production wrote it expecting stream foo to be at version 1, but in staging the stream is already at version 2 because of SomeOtherEventYouAreTesting. Instead, you likely want to write with ExpectedVersion.Any from your catch-up subscription. As a side note, having done this before: you probably want to add some metadata to events in production, e.g. {source: ‘production’}, so you can tell a “real” event apart from one someone has been playing with in staging.

As you are writing in staging, you will frequently want to come back to a known position. The easiest way to do this is to occasionally take a backup of the system just after it has caught up and before you write any new events to it. This way, when you want to blow it away to get rid of staging events, you can revert to the last backup and move forward from there. For example, in your case you would take a backup the moment it has caught up with the 10 GB database. You can then use it for a week or so, then fall back to the backup from a week ago and move forward, as opposed to resyncing the entire 10 GB (you only get the new events that happened in the last week). Does this make sense?
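The conflict can be reproduced with a toy in-memory store. Everything here is hypothetical: `ToyStore`, `WrongExpectedVersion` and the `ANY` sentinel only mimic optimistic concurrency with 0-based stream versions and are not the EventStore client API.

```python
from typing import Dict, List, Optional

ANY: Optional[int] = None  # plays the role of ExpectedVersion.Any in this sketch


class WrongExpectedVersion(Exception):
    pass


class ToyStore:
    """In-memory streams with optimistic concurrency; versions are 0-based."""

    def __init__(self) -> None:
        self.streams: Dict[str, List[dict]] = {}

    def append(self, stream: str, event: dict, expected_version: Optional[int]) -> int:
        events = self.streams.setdefault(stream, [])
        current = len(events) - 1  # -1 means the stream has no events yet
        if expected_version is not ANY and expected_version != current:
            raise WrongExpectedVersion(
                f"{stream}: expected {expected_version}, actual {current}"
            )
        events.append(event)
        return len(events) - 1


def copy_from_production(staging: ToyStore, stream: str, event: dict) -> int:
    # Tag copied events so "real" events can be told apart from staging ones,
    # and ignore versions because staging streams may contain extra events.
    metadata = {**event.get("metadata", {}), "source": "production"}
    return staging.append(stream, {**event, "metadata": metadata}, ANY)
```

Writing `BazOccured` to staging with expected version 1 raises, while `copy_from_production` appends it after the staging-only event.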
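The backup-and-roll-forward idea can be modelled in a few lines. This is an in-memory sketch under the assumption that staging remembers how many production events it has copied; `StagingStore` and `catch_up_from` are hypothetical names, and a real setup would use the database's own backup and restore.

```python
import copy
from typing import Any, Dict, List, Tuple

Event = Dict[str, Any]
Snapshot = Tuple[List[Event], int]


class StagingStore:
    """Toy staging store that remembers how far it has copied production."""

    def __init__(self) -> None:
        self.events: List[Event] = []
        self.prod_position = 0  # number of production events copied so far

    def backup(self) -> Snapshot:
        return copy.deepcopy(self.events), self.prod_position

    def restore(self, snapshot: Snapshot) -> None:
        events, position = snapshot
        self.events = copy.deepcopy(events)  # keep the snapshot reusable
        self.prod_position = position


def catch_up_from(staging: StagingStore, production: List[Event]) -> int:
    """Copy only the production events staging has not seen yet."""
    new_events = production[staging.prod_position:]
    staging.events.extend(copy.deepcopy(new_events))
    staging.prod_position = len(production)
    return len(new_events)
```

After a restore, `catch_up_from` copies only the events written to production since the backup, not the whole history.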

Cheers,

Greg

BTW, having built this before, can I suggest a design?

Use your catch-up subscription to read all the events. In the receive handler, call a factory to get a transformation (the factory defaults to a null transformation). The transformation returns the new event. Write it. It is clean, simple, easy to extend and easy to manage. I consider it a core tool in most production implementations. Done properly, the transformations will also be either auto-discovered or explicitly configured (I tend to use discovery with explicit override).

The cool thing about the tool is that it has many uses with a production system. The classic one is building out real-time updating staging and dev models (which also support returning data either anonymized or brought forward to a later version!). A second one is that it can be used for handling upgrades in production when you want to deprecate an old version of an event (or an old event in general that you now want to remove).
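A minimal sketch of that design follows, assuming events are dicts keyed by a hypothetical `type` field. The decorator stands in for auto-discovery, `override` for explicit configuration, and returning `None` to drop an event is an assumption added here for the deprecation use case mentioned next.

```python
from typing import Any, Callable, Dict, Optional

Event = Dict[str, Any]
Transformation = Callable[[Event], Optional[Event]]


def null_transformation(event: Event) -> Optional[Event]:
    return event  # default: copy the event unchanged


class TransformationFactory:
    """Discovered transformations, with explicit overrides taking precedence."""

    def __init__(self) -> None:
        self._discovered: Dict[str, Transformation] = {}
        self._overrides: Dict[str, Transformation] = {}

    def discover(self, event_type: str) -> Callable[[Transformation], Transformation]:
        # Decorator standing in for auto-discovery (e.g. scanning a module).
        def register(fn: Transformation) -> Transformation:
            self._discovered[event_type] = fn
            return fn
        return register

    def override(self, event_type: str, fn: Transformation) -> None:
        self._overrides[event_type] = fn  # explicit configuration wins

    def get(self, event_type: str) -> Transformation:
        if event_type in self._overrides:
            return self._overrides[event_type]
        return self._discovered.get(event_type, null_transformation)


def on_event(event: Event, factory: TransformationFactory,
             write: Callable[[Event], None]) -> None:
    new_event = factory.get(event["type"])(event)
    if new_event is not None:  # None drops the event (e.g. a deprecated type)
        write(new_event)
```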

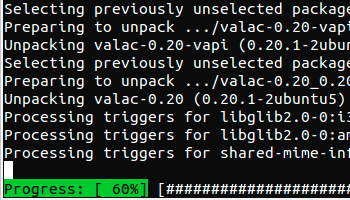

Again it should be simple to manage and I would even go the extra mile and add a status (how far is your catchupsubscription from the position of the last event). I use linux so I like to use something like this
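A status line of that kind could be computed as below. This sketch assumes you can read the copier's checkpoint and the position of the last event as integers; `catch_up_status` and `watch` are hypothetical helpers, and the polling loop simply prints a refreshed line at a fixed interval.

```python
import time
from typing import Callable


def catch_up_status(checkpoint: int, last_position: int) -> str:
    """Summarise how far the subscription is behind the last event."""
    behind = max(last_position - checkpoint, 0)
    if last_position <= 0:
        pct = 100.0  # empty source: nothing to catch up on
    else:
        pct = min(100.0, 100.0 * checkpoint / last_position)
    return f"checkpoint={checkpoint} last={last_position} behind={behind} ({pct:.1f}% caught up)"


def watch(get_checkpoint: Callable[[], int], get_last: Callable[[], int],
          interval: float = 1.0, iterations: int = 10) -> None:
    # Poll both positions and print a fresh status line each interval.
    for _ in range(iterations):
        print(catch_up_status(get_checkpoint(), get_last()), flush=True)
        time.sleep(interval)
```

For example, `catch_up_status(750, 1000)` returns `checkpoint=750 last=1000 behind=250 (75.0% caught up)`.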

Hope this helps,

Greg

Now that I am thinking about it, this is the kind of little tool that would likely be useful for us to ship (it's only a few days' worth of work to make a “nice” one, and it's quite a useful tool to have sitting around for various reasons).

Yes. This is fantastic.

My initial thought - make it work in two ways:

- A simple app that “just works” from command-line/environment variable configuration

- A NuGet package that allows users to build their own application, injecting various logic.
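For the “just works” variant, configuration could come from flags with environment variables as defaults. This is a sketch only: the `REPLICATOR_*` variable names and the flags are hypothetical, chosen for illustration.

```python
import argparse
import os
from typing import List, Mapping, Optional


def parse_config(
    argv: Optional[List[str]] = None, env: Optional[Mapping[str, str]] = None
) -> argparse.Namespace:
    """Command-line flags win; environment variables act as defaults."""
    env = os.environ if env is None else env
    parser = argparse.ArgumentParser(
        description="Copy events from a source store to a target store, transforming them."
    )
    parser.add_argument("--source", default=env.get("REPLICATOR_SOURCE"),
                        help="source connection string (env: REPLICATOR_SOURCE)")
    parser.add_argument("--target", default=env.get("REPLICATOR_TARGET"),
                        help="target connection string (env: REPLICATOR_TARGET)")
    parser.add_argument("--transform", default=env.get("REPLICATOR_TRANSFORM", "null"),
                        help="transformation to apply (env: REPLICATOR_TRANSFORM)")
    args = parser.parse_args(argv)
    if not args.source or not args.target:
        parser.error("--source and --target (or their environment variables) are required")
    return args
```

Environment variables suit containers, while flags are handy for one-off runs; the library variant would expose the same settings as constructor arguments instead.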

If you don’t get around to making it in the next month or so yourself, I’ll be happy to kick it off myself and open-source it.

How would the app “reset” the destination eventstore?

“It depends”

I am sure that is not what you wanted to hear.

What I would do is replicate into a clean instance as of point in time T. Take a backup, then use it as you wish. When you want to “reset”: restore the backup, catch up until now, take a backup, then do whatever writes you want. So long as we are talking about a short period between restores (say 1-2 weeks), this process should take seconds to minutes, depending on your throughput.

Run the Synchronizing tool:

a) Stops the destination eventstore

b) Pushes stored backup database files into destination eventstore, replacing existing files

c) Starts the destination eventstore.

d) Gets stream checkpoints from destination eventstore

e) Pushes all transformed events to destination eventstore

f) Creates a new backup of the “unblemished” database files.
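The steps above could be orchestrated roughly like this. The sketch assumes the node keeps its database files in a single directory that is only copied while the node is stopped; `stop`, `start` and `catch_up` are hypothetical callables you would wire to your own service manager and copier, not part of any EventStore tooling. Because it copies files directly, this version only works on the same machine or container as the node.

```python
import shutil
from pathlib import Path
from typing import Callable


def reset_and_sync(
    db_dir: Path,
    backup_dir: Path,
    stop: Callable[[], None],
    start: Callable[[], None],
    catch_up: Callable[[], int],
) -> int:
    """Restore the clean backup, catch up, then refresh the backup."""
    stop()                                     # a) stop the destination node
    shutil.rmtree(db_dir, ignore_errors=True)  # b) replace the database files
    shutil.copytree(backup_dir, db_dir)        #    with the stored backup
    start()                                    # c) start the destination node
    copied = catch_up()                        # d) + e) resume from its checkpoint
    stop()                                     # f) copy files only while stopped
    fresh = backup_dir.with_name(backup_dir.name + ".new")
    shutil.rmtree(fresh, ignore_errors=True)
    shutil.copytree(db_dir, fresh)
    shutil.rmtree(backup_dir)                  # swap in the new "unblemished" backup
    fresh.rename(backup_dir)
    start()
    return copied
```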

An app like this could work if it’s installed on the same machine or container as the destination eventstore.

Could you make it work if it’s installed on a different machine or container?

I don’t need it that complicated for myself - with my “micro-SD” size data, I can just do the entire restore from the beginning every time, of course.