Here is an interesting tidbit. Using the test client with the ES db on my os drive 10000/s avg. On the striped array… 12000/s.

Likely it’s the fsyncs that are slowing you down not data throughput

I am also running some performance tests. When I run TestClient with “wrfl 1 100000” I get the following output:

[07984,10,19:28:23.071] Connection [127.0.0.1:1113] was closed cleanly.

[07984,10,19:28:23.071] Completed. Successes: 100000, failures: 0 (WRONG VERSION: 0, P: 0, C: 0, F: 0, D: 0)

[07984,09,19:28:23.071] Command exited with code 0.

[07984,10,19:28:23.071] 100000 requests completed in 5551ms (18014.77 reqs per sec).

I added the following test to the getting-started-with-event-store solution as our project uses a similar pattern:

[Test]

public void WritePerformanceTest()

{

Stopwatch writeStopwatch = new Stopwatch();

int numberOfEvents = 5000;

var aggregateToSave = new TestAggregate(Guid.NewGuid());

writeStopwatch.Start();

for (int i = 0; i < numberOfEvents; i++)

{

aggregateToSave.ProduceEvents(1);

_repo.Save(aggregateToSave, Guid.NewGuid(), d => { });

}

writeStopwatch.Stop();

double eventsPerSecond = numberOfEvents/writeStopwatch.Elapsed.TotalSeconds;

Console.WriteLine("{0} events written in {1} seconds. Events per second = {2}",

numberOfEvents, writeStopwatch.Elapsed.TotalSeconds, eventsPerSecond);

}

The output from this test is: 5000 events written in 17.9621338 seconds. Events per second = 278.363364602039

I expected that this test would perform much better than 278 events per second, what is the bottle neck in the getting-started-with-event-store code?

Thanks,

Philip

Not that I think it will make a huge difference, but the only thing that should be within the stopwatch is the _repo.Save(…). Event production is not part of the event store.

Your test tests sequential writes. WRFL command does async writes, it sends a lot of writes simultaneously, not waiting for previous to complete. This test tests EventStore’s ability to handle a lot of simultaneous write requests.

Your sequential tests are heavily dependent on disk flush time (for HDD it is around 20-30ms or more per flush, so you will get not more than 30-50 sequential writes per second, for SSD I observe latency of write at 2-3ms, so you can get around 300-500 sequential writes).

But when we do WRFL, we send a lot of writes with ExpectedVersion.Any having 1000-2000 write requests sitting in the queue at the server. That allows EventStore to batch writes and amortize flush time among many write requests, not just single one, as in case of sequential writes.

Btw, to test sequential speed in our TestClient, there is WRFLW command (WRite FLood Waiting). See help for it to see available parameters.

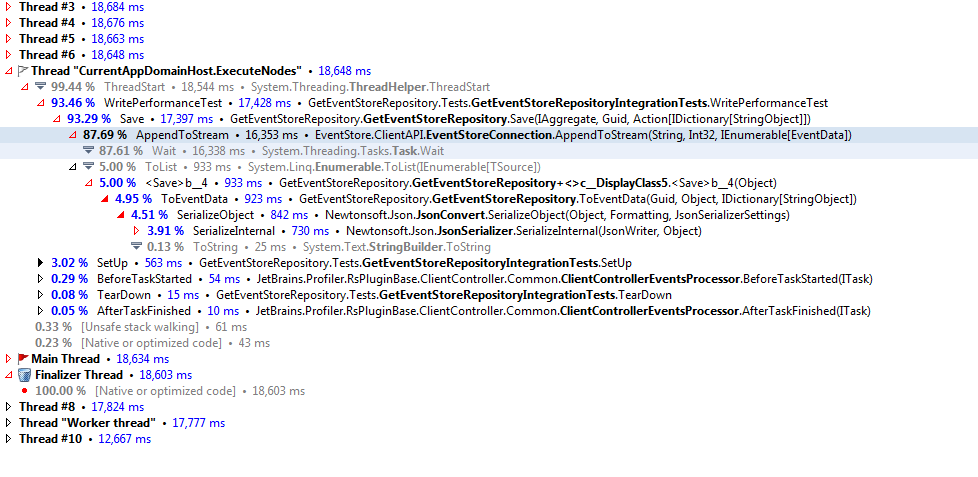

See the attached screenshot from dotTrace. 5000 calls to AppendToStream took 16.3second.

I that expected based on my test results from TestClient?

It’s because you do blocking write. Try using AppendToStreamAsync and continuation task, you’ll see almost negligible time spent in ClientAPI. Sync version of AppendToStream is blocked waiting for I/O to complete, so no wonder. You CPU is not hurt, don’t worry.

Would it be a good idea to update the gist of the CommonDomainRepository to use the asynch version? I know a lot of people are using that at least as a starting template.

Phil

Not really. Just try using multiple threads calling it.

Thanks Andrii, the test now has the following output:

5000 events written in 0.3593246 seconds. Events per second = 13914.9949655548

So when trying to deal with large volume of single events obviously AppendToStreamAsync is the best solution for fast throughput. Are there any pitfall to be aware of when using async?

In what cases would you not use async?

Asynchronous is just allowing you to send multiple concurrent requests. Threads do this as well.

Why choose threads vs using AppendToStreamAsync?

Asyncs don’t waste thread resources, they allow them to be reused for other operations, but programming with asyncs are harder and code becomes very non-linear. The flow of program becomes very hard to follow and error handling becomes especially non-obvious.

Though, if you can use C# 5 and async/wait constructs, there is no need to use blocking operations, the program flow will seem sequential, but there will be no wasting of resources. Plus error handling with async code becomes just usual – try/catch constructs as you would do with blocking operations.

Andrii,

I am very interested in how to handle errors correctly when using async. I haven’t moved to .Net 4.5 yet and I am not familiar with async/wait constructs.

Could you provide an example of how to use AppendToStreamAsync with async/wait constructs?

Thanks,

Philip

Do two writes (or n) then await all of them.

If you look at the source code, the synchronous versions are really async underneath:

public void AppendToStream(string stream, int expectedVersion, params EventData[] events)

{

AppendToStreamAsync(stream, expectedVersion, (IEnumerable<EventData>) events).Wait();

}

Where AppendToStreamAsync is implemented as:

public Task AppendToStreamAsync(string stream, int expectedVersion, IEnumerable events)

{

Ensure.NotNullOrEmpty(stream, “stream”);

Ensure.NotNull(events, “events”);

EnsureActive();

var source = new TaskCompletionSource();

var operation = new AppendToStreamOperation(source, Guid.NewGuid(), _settings.AllowForwarding, stream, expectedVersion, events);

EnqueueOperation(operation);

return source.Task;

}

So calling Wait() on the task which represents the enqueued operation will cause your code to block until it is complete. You could have n tasks, and do:

Task.WaitAll(new[] { t1, t2, t3 })

``

You could do this with .NET 4 as well as 4.5, however C# 5 has keywords which improve the readability of such code.

Cheers,

James

On Wednesday, 13 March 2013 14:19:55 UTC, pogorman wrote:

Here is the snippet of code (with some non-critical parts missing) that does asynchronous sequential writes to multiple streams and retry in case of errors, etc.

Just to feel the difference between using Tasks explicitly and using async/await + exception handling in C# 5, try to rewrite this code into explicit tasks with continuations.

private async Task LaunchNodeConnection(int nodeIndex, int streamSize, int writerCount)

{

var connSettings = ConnectionSettings.Create()

.EnableOperationsForwarding()

.LimitAttemptsForOperationTo(50)

.LimitConcurrentOperationsTo(500)

.LimitOperationsQueueTo(5000)

.KeepReconnecting()

.SetOperationTimeoutTo(TimeSpan.FromSeconds(10))

.SetReconnectionDelayTo(TimeSpan.FromSeconds(5))

.OnErrorOccurred((x, exc) => Log.ErrorException(exc, “Error occurred on connection to Node {0}”, nodeIndex))

.OnClosed((x, exc) => Log.Info(“Connection to Node {0} closed.”, nodeIndex))

.OnConnected(x => Log.Info(“Connected to Node {0}”, nodeIndex))

.OnDisconnected(x => Log.Info(“Disconnected from Node {0}”, nodeIndex))

.OnReconnecting(x => Log.Info(“Reconnecting for Node {0}”, nodeIndex));

var conn = EventStoreConnection.Create(connSettings);

var nodeInfo = new NodeInfo(nodeIndex);

await conn.ConnectAsync(new IPEndPoint(nodeInfo.ExternalIp, nodeInfo.ExternalTcpPort));

var progress = new Progress(Stopwatch.StartNew(), 0);

var tasks = new Task[writerCount];

for (int writer = 0; writer < writerCount; ++writer)

{

tasks[writer] = NodeWriterProcess(nodeIndex, streamSize, writerCount, writer, conn, progress);

}

await Task.WhenAll(tasks);

Log.Info(“DONE WRITING ALL WRITERS TO NODE {0}!!!”, nodeIndex);

}

private class Progress

{

public readonly Stopwatch Stopwatch;

public long All;

public Progress(Stopwatch stopwatch, long all)

{

Stopwatch = stopwatch;

All = all;

}

}

private async Task NodeWriterProcess(int nodeIndex,

int streamSize,

int writerCount,

int writer,

EventStoreConnection conn,

Progress progress)

{

var stream = string.Format(“some-stream-at-node-{0}-writer-{1}”, nodeIndex, writer);

for (int i = 0; i < streamSize; ++i)

{

int trial = 0;

bool written = false;

var evnt = GenerateEvent(nodeIndex, writer, i);

while (!written && trial < 20)

{

trial += 1;

try

{

await conn.AppendToStreamAsync(stream, i == 0 ? -1 : i, new[] {evnt});

var total = Interlocked.Increment(ref progress.All);

if (trial > 1)

Log.Info(“Written to {0} (w: {1}, e: {2}) on trial {3}.”, stream, writer, i, trial);

if (total > 0 && total % 1000 == 0)

{

Log.Info(“Written {0} events on node {1}. ({2:0.00} reqs/s, {3:0.00} reqs/s per writer)”,

total,

nodeIndex,

total/progress.Stopwatch.Elapsed.TotalSeconds,

total/progress.Stopwatch.Elapsed.TotalSeconds/writerCount);

}

written = true;

}

catch (Exception exc)

{

Log.DebugException(exc, “Exception during writing event #{0} at node {1}, writer {2} and trial {3}.”, i, nodeIndex, writerCount, trial);

}

}

if (!written)

throw new Exception(string.Format("{0}th event on node {1}, writer {2} wasn’t written!", i, nodeIndex, writer));

}

Log.Info(“DONE WRITING TO NODE {0} FOR WRITER {1}!!!”, nodeIndex, writer);

}